Global visual salience

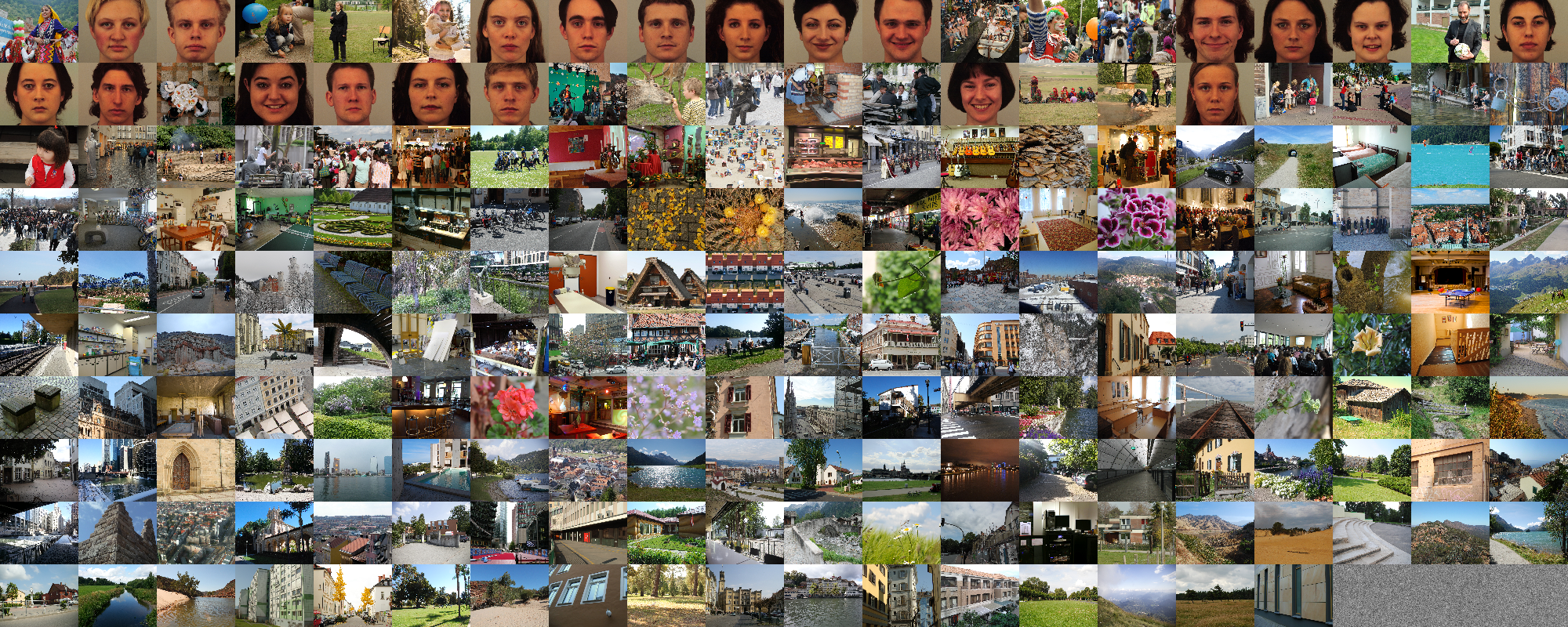

Our pre-print “Global visual salience of competing stimuli” is out on PsyArXiv! We ran an eye-tracking experiment showing natural images side by side and trained a machine learning model to predict the direction of the first fixation (left or right) given a pair of images. The coefficient learned for each image characterizes how likely that image is to attract the first saccade when shown next to a competing stimulus, a property we call global visual salience.
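To make the idea of per-image coefficients more concrete, here is a minimal sketch on synthetic data. It assumes a plain logistic regression in which each trial is encoded with +1 for the left image, -1 for the right image, and a constant term for a lateral bias. That encoding, the function names (`build_design_matrix`, `fit_logistic`) and all the numbers are illustrative assumptions, not necessarily the exact setup used in the pre-print.

```python
import numpy as np

def build_design_matrix(left_idx, right_idx, n_images):
    """One row per trial: +1 for the left image, -1 for the right image.

    The last column is a constant that absorbs a general left/right bias.
    (Hypothetical encoding, chosen for illustration.)
    """
    n_trials = len(left_idx)
    X = np.zeros((n_trials, n_images + 1))
    rows = np.arange(n_trials)
    X[rows, left_idx] = 1.0
    X[rows, right_idx] = -1.0
    X[:, -1] = 1.0
    return X

def fit_logistic(X, y, lr=0.5, n_steps=2000, l2=1e-3):
    """L2-regularized logistic regression fitted by gradient descent."""
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))       # P(first fixation lands left)
        grad = X.T @ (p - y) / len(y) + l2 * w
        w -= lr * grad
    return w

# Synthetic experiment: 6 images with made-up "true" salience scores.
rng = np.random.default_rng(0)
n_images, n_trials = 6, 4000
true_salience = np.array([1.5, 0.8, 0.0, -0.3, -0.8, -1.2])
true_left_bias = 0.4

left_idx = rng.integers(0, n_images, n_trials)
# Shift by 1..n_images-1 so the right image always differs from the left one.
right_idx = (left_idx + rng.integers(1, n_images, n_trials)) % n_images

X = build_design_matrix(left_idx, right_idx, n_images)
logits = true_salience[left_idx] - true_salience[right_idx] + true_left_bias
y = (rng.random(n_trials) < 1.0 / (1.0 + np.exp(-logits))).astype(float)

w = fit_logistic(X, y)
salience, left_bias = w[:-1], w[-1]   # per-image scores and lateral bias
```

In this sketch, `salience[i]` plays the role of the global salience of image `i`: the larger it is, the more often that image wins the first fixation regardless of its opponent. The scores are only identified up to a common offset, which the small L2 penalty resolves here.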

Interestingly, global visual salience is independent of the images' local salience maps: the saccadic choice was not driven by locally salient properties but, at least in part, by the semantic content of the images. For instance, we found that faces and images with people have higher global salience than urban, indoor, or natural scenes.

We also confirmed a relatively strong general preference for the left image, although with high variability across participants. In addition, we found no influence of other factors, such as familiarity with one of the images or the task of determining which of the two images had been seen before.