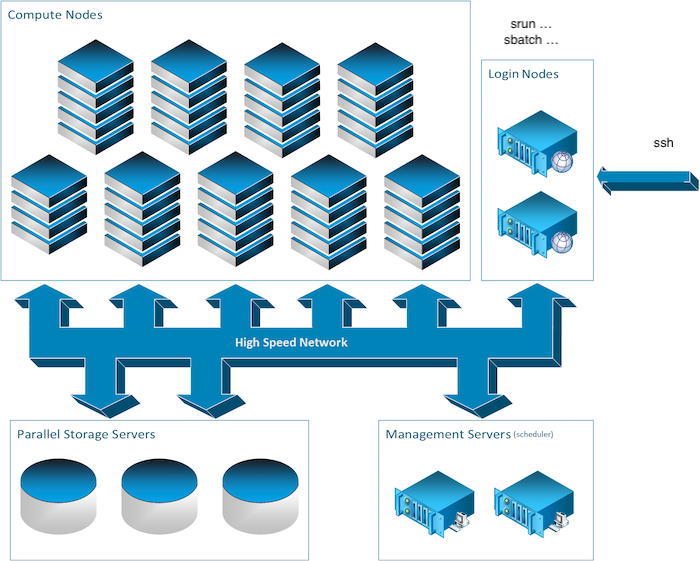

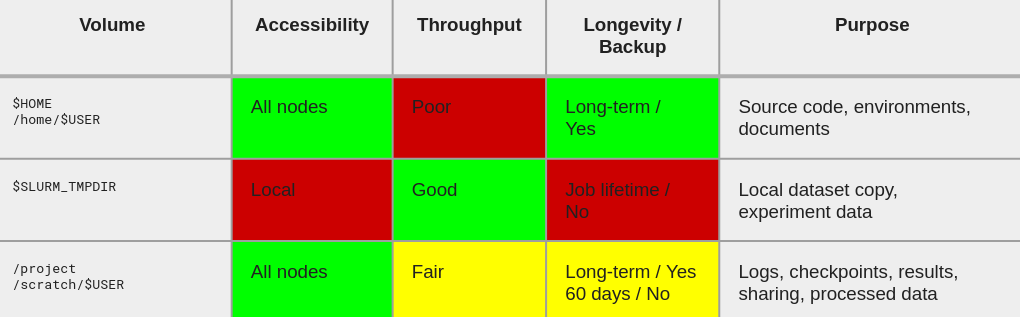

name: title class: title, middle ## IFT 3710/6759 ## Projets (avancés) en apprentissage automatique #### .gray224[20 janvier 2023 - Session 4] ### .gray224[Tutoriel clusters HPC] .smaller[Slides disponibles sur [alexhernandezgarcia.github.io/teaching/mlprojects23/slides/20230120-cluster](https://alexhernandezgarcia.github.io/teaching/mlprojects23/slides/20230120-cluster)] .center[ <a href="http://www.umontreal.ca/"><img src="../../../assets/images/slides/logos/udem-white.png" alt="Mila" style="height: 6em"></a> ] Alex Hernández-García (he/il/él) .footer[[alexhernandezgarcia.github.io](https://alexhernandezgarcia.github.io/) | [alex.hernandez-garcia@mila.quebec](mailto:alex.hernandez-garcia@mila.quebec)]<br> .footer[[@alexhg@scholar.social](https://scholar.social/@alexhg) [](https://scholar.social/@alexhg) | [@alexhdezgcia](https://twitter.com/alexhdezgcia) [](https://twitter.com/alexhdezgcia)] ??? - The class is going to be a mix of lecture and hands-on --- ## Format of the class and objective This class will combine an introduction to the core ideas about HPC clusters with hands-on tutorial. The .highlight1[goal] is that at the end of the class: * You know the core concepts of high-performance computing. * You understand the core idea of computing jobs scheduling. * You know how to run your code in a machine of Compute Canada. * You widen your software development toolbox. -- Question: Have you ever used a compute cluster? Go to [www.menti.com](https://www.menti.com) and use the code **7899 2316** or click on: .center[[https://www.menti.com/5w8213it33](https://www.menti.com/5w8213it33)] ??? I am not an expert. --- ## Why does all this matter? Because you will have access to computational resources from Calcul Québec for the development of your project, very kindly set up by .highligh1[Maxime Boissonneault], responsable des services à la recherche. -- Please take a few minutes to create an account to use the cluster from Calcul Québec. .center[[https://mokey.ift6759.calculquebec.cloud/auth/signup](https://mokey.ift6759.calculquebec.cloud/auth/signup)] .highlight1[Important]: Please use your **first name**, **last name**, and **UdeM email** as it appears on StudiUM. -- Besides this cluster, computational resources are also available via Colab: [colab.research.google.com](https://colab.research.google.com/) --- ## What is HPC? .highlight1[High-performance computing (HPC)] typically refers to supercomputers, that is clusters of computers that provide access to computational resources. A .highlight1[cluster] is a set connected computers that work together such that they can be viewed as a single system. Each computer is typically referred to as a .highlight1[node]. There are computing nodes, storage nodes, login nodes, etc. .left-column[ * **Login nodes**: The entry point to the cluster. * **Compute nodes**: Computers with hardware specialised for high-performance computation, for example GPUs. * **Storage nodes**: Suitable for storing large or multiple files. ] .right-column[ .center[] ] --- ## Compute Canada and Calcul Québec .highlight1[[Compute Canada](https://www.computecanada.ca/)] provides HPC to researchers in Canadian institutions and works closely with regional counterparts such as .highlight1[[Calcul Québec](https://www.calculquebec.ca/)]. Resources: * Compute Canada Wiki: [docs.computecanada.ca](https://docs.computecanada.ca) * Support email: `support@calculquebec.ca` --- ## Cluster _theory_ ### Login nodes * Remote connection to the nodes (via SSH, see later). * Collectively shared. * Gateway into the cluster. * Important: not meant for heavy weight computing tasks. * Typical use: managing source code, environments and data. * Typical use: submitting jobs to the compute nodes. --- ## Cluster _theory_ ### Compute nodes * Computation hardware: * GPUs * RAM * CPUs * Meant for large tasks. * Jobs are submitted to, and run on, the compute nodes. * Shared filesystems are accessible from the compute nodes. --- ## Cluster _theory_ ### Jobs Because resources are limited, jobs in the compute nodes are handled by a _job scheduler_: .highlight1[[Slurm](https://slurm.schedmd.com/)] > Slurm is an open source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters. * Jobs are _submitted_ from the login node * and _run_ on compute nodes. -- There are two types of jobs: * Interactive: via `salloc`, which allocates resources for a job in real time and spawns a shell. * Batch: via `sbatch`, which submits a job script for later execution. .references[ The [Slurm documentation](https://slurm.schedmd.com/) should become your friend. ] --- ## Cluster _theory_ ### Interactive jobs: `salloc` Though batch submission is the most common and most efficient way to take advantage of Slurm clusters, interactive jobs can be useful for: * Data exploration at the command line. * Interactive IPython, * Software development, debugging, or compiling, Interactive jobs are started with `salloc`, which accepts [multiple options (flags)](https://slurm.schedmd.com/salloc.html). For example: ``` bash $ salloc --time=1:00:00 --mem=2G ``` .references[ Source: [Compute Canada Wiki](https://docs.computecanada.ca/wiki/Running_jobs#Interactive_jobs) ] --- ## Cluster _theory_ ### Interactive jobs: `sbatch` .left-column-66[ [Batch jobs](https://slurm.schedmd.com/sbatch.html) are run unattended by user at a future time scheduled by Slurm. To run a batch job: ``` bash $ sbatch [--slurmargs] script.sh [--scriptargs] ``` The Slurm arguments can go right after the _shebang_ in the script, or directly in the command line. The following is equivalent: ``` bash $ sbatch --time=00:15:00 script.sh Alex ``` ] .right-column-33[ A sample minimal `script.sh` ``` bash #!/bin/bash #SBATCH --time=00:15:00 # Args # $1: name echo "Hello, $1" sleep 10 echo "Bye!" ``` ] .references[ Source: [Compute Canada Wiki](https://docs.computecanada.ca/wiki/Running_jobs#Use_sbatch_to_submit_jobs) ] --- ## Cluster _theory_ ### Job monitoring Other useful Slurm tools are the following: * `squeue -u $USER`: list the queue of current jobs submitted by yourself (`$USER$`) * `scancel <jobid>`: cancel a job * `scontrol show job -dd <jobid>`: info about a running job * `seff <jobid>`: info about a finished job --- ## Cluster _theory_ ### Filesystem .center[] * `$SLURM_TMPDIR`: is the temporary directory used by Slurm with the highest throughput during the job execution. So: copy here as much as you can before running your scripts, write here your outputs and copy them back to a permanent location at the end. * `/scratch/$USER/` is where you can _permanently_ (60 days) store your logs, results, checkpoints, etc. --- ## Cluster _theory_ ### LMod & Software Modules `module` is a shell command that makes needed software available. It works by modifying the `$PATH`, `$LD_LIBRARY_PATH` and other variables to make executables and libraries findable or not. * `module avail`: list available modules * `module spider <module-name>`: search available modules * `module load <name/version>`: load module. For instance: * `module load python/3.9.6` * `module load pytorch/1.8.1` * `module list`: list loaded modules * `module unload <name/version>`: unload module. * `module swap <name-old> <name-new>`: swap modules * `module purge`: unload all modules --- ## Cluster _theory_ ### Python: best practices * Load Python with `module load python/3.x[.y]` * Always use `virtualenv` * Consider building the virtual environment in the compute node: * It improves I/O performance * Use pre-downloaded packages with `module` * Avoid Anaconda: * It handles library management that should be left to Compute Canada admins * It installs in `$HOME` by default and makes tons of files. * It is slower to install packages * It modifies `$HOME/.bashrc` --- ## Cluster _theory_ ### Other good practices (and bad practices) * Do not run code in the login nodes. * Try to not request more resources than you need and for more time you need. * Use archives (`.tar`, `.hdf5`, etc.): do not unpack gazillion files because it makes things slow. * Profile your code. * Use `$SLURM_TMPDIR`: * To build your virtual environment whenever possible. * To copy your data set at the start. * To write your results during execution. --- ## Why does all this matter? Because you will have access to computational resources from Calcul Québec for the development of your project, very kindly set up by .highligh1[Maxime Boissonneault], responsable des services à la recherche. Please take a few minutes to create an account to use the cluster from Calcul Québec. .center[[https://mokey.ift6759.calculquebec.cloud/auth/signup](https://mokey.ift6759.calculquebec.cloud/auth/signup)] .highlight1[Important]: Please use your **first name**, **last name**, and **UdeM email** as it appears on StudiUM. --- name: title class: title, middle ## IFT 3710/6759 ## Projets (avancés) en apprentissage automatique #### .gray224[20 janvier 2023 - Session 4] ### .gray224[Tutoriel clusters HPC] .bigger[.bigger[.highlight1[Pause: 10 minutes]]] .center[ <a href="http://www.umontreal.ca/"><img src="../../../assets/images/slides/logos/udem-white.png" alt="Mila" style="height: 6em"></a> ] Alex Hernández-García (he/il/él) .footer[[alexhernandezgarcia.github.io](https://alexhernandezgarcia.github.io/) | [alex.hernandez-garcia@mila.quebec](mailto:alex.hernandez-garcia@mila.quebec)]<br> .footer[[@alexhg@scholar.social](https://scholar.social/@alexhg) [](https://scholar.social/@alexhg) | [@alexhdezgcia](https://twitter.com/alexhdezgcia) [](https://twitter.com/alexhdezgcia)] --- ## Connect to the cluster ### SSH The Secure Shell Protocol (SSH) is based on a client–server architecture, connecting an SSH client instance with an SSH server. We can use it to connect to our Calcul Québec cluster: ``` bash $ ssh <username>@ift6759.calculquebec.cloud Password: Last login: ... from ... Lmod is automatically replacing "intel/2020.1.217" with "gcc/9.3.0". [<username>@login1 ~]$ ``` Configuring SSH keys for GitHub can take a while. These resources may help: * [Generating a new SSH key and adding it to the ssh-agent](https://docs.github.com/en/authentication/connecting-to-github-with-ssh/generating-a-new-ssh-key-and-adding-it-to-the-ssh-agent) * [About `Error: Permission denied (publickey)`](https://docs.github.com/en/authentication/troubleshooting-ssh/error-permission-denied-publickey) --- ## Connect to the cluster ### `rsync` `rsync` is a fast, versatile, remote (and local) file-copying tool. ``` bash rsync -a dir1/ dir2 rsync -a dir1/ username@remote_host:destination_directory ``` * The trailing slash `/` after `dir1` is necessary if you do not want to place `dir1` inside `dir2`. * `-a`: stands for “archive” and syncs recursively and preserves symbolic links, special and device files, modification times, group, owner, and permissions. * `-v`: verbose * `-n, --dry-run` * `--partial`, `--progress`, `--exclude` .references[ Reference: [How to use rsync to sync local and remote directories, Digital Ocean](https://www.digitalocean.com/community/tutorials/how-to-use-rsync-to-sync-local-and-remote-directories) ] --- ## Customising our cluster shell (optional) ### `bash`, `tmux`, `vim`... I will use my configuration files from `https://github.com/alexhernandezgarcia/linux-config-utils`. .highlight1[Bash] In the cluster shell: ``` bash $ git clone git@github.com:alexhernandezgarcia/linux-config-utils.git $ cp linux-config-utils/bash/.bashrc.cluster ~/.bashrc $ cp linux-config-utils/bash/.bash_aliases ~/ source ~/.bashrc ``` .highlight1[`tmux`] In the cluster shell (the first line is needed only the first time): ``` bash $ git clone git@github.com:alexhernandezgarcia/linux-config-utils.git $ cp linux-config-utils/tmux/.tmux.conf ~/ ``` --- count: false ## Customising our cluster shell (optional) ### `bash`, `tmux`, `vim`... .highlight1[Vim] In the cluster shell (the first line is needed only the first time): ``` bash $ git clone git@github.com:alexhernandezgarcia/linux-config-utils.git $ cp linux-config-utils/vim/.vimrc.cluster ~/.vimrc ``` To set up the Vim plugins with [Vundle](https://github.com/VundleVim/Vundle.vim): ``` bash git clone git@github.com:VundleVim/Vundle.vim.git ~/.vim/bundle/Vundle.vim ``` Then launch `vim` and do `:PluginInstall`. Note that some plugins are commented out (YouCompleteMe and Syntastic) because they require a slightly longer installation. These are great for coding though! --- ## An optional setup of the cluster ### Directories for code, logs, etc. Directories for: * generic `sbatch` scripts * `sbatch` logs in `scratch` * code snapshots in `scratch` ``` bash $ mkdir sbatch $ mkdir scratch/logs $ mkdir scratch/code-snapshots ``` --- ## Toy jobs ### Exercises Create a toy Python script `toy.py`, for instance: ``` python a = 7 b = 6 c = a + b print(c) ``` 1. Create a Python virtual environment in `$HOME` and run the script in the login node. .highlight1[This should not be done! We do it because it's very simple script!] 2. Start an interactive job (`salloc`), activate the environment, run the script and terminate the job. 3. Create a minimal `toy.sh` script to be run with `sbatch`. --- ## A real ML model and a real `sbatch` script Let's clone the repository of extreme weather events detection and copy a snapshot of the code into `scratch`: ``` bash git clone git@github.com:alexhernandezgarcia/ift-3710-6759-extremeweather.git rsync --bwlimit=10mb -av ift-3710-6759-extremeweather ~/scratch/code-snapshots/ --exclude .git ``` Let's create a complete `sbatch` script, `~/sbatch/extremeweather.sh` (part 1): ``` bash #!/bin/bash #SBATCH --job-name=extremeweather # Job name #SBATCH --cpus-per-task=1 # Ask for 1 CPUs #SBATCH --mem=1Gb # Ask for 1 GB of RAM #SBATCH --output=/scratch/<username>/logs/slurm-%j-%x.out # log file #SBATCH --error=/scratch/<username>/logs/slurm-%j-%x.error # log file # Arguments # $1: Path to code directory # Copy code dir to the compute node and cd there rsync -av --relative "$1" $SLURM_TMPDIR --exclude ".git" cd $SLURM_TMPDIR/"$1" ``` --- count: false ## A real ML model and a real `sbatch` script Let's clone the repository of extreme weather events detection and copy a snapshot of the code into `scratch`: ``` bash git clone git@github.com:alexhernandezgarcia/ift-3710-6759-extremeweather.git rsync --bwlimit=10mb -av ift-3710-6759-extremeweather ~/scratch/code-snapshots/ --exclude .git ``` Let's create a complete `sbatch` script, `~/sbatch/extremeweather.sh` (part 2): ``` bash # Setup environment module purge module load StdEnv/2020 module load python/3.9.6 export PYTHONUNBUFFERED=1 virtualenv $SLURM_TMPDIR/venv source $SLURM_TMPDIR/venv/bin/activate python -m pip install --upgrade pip python -m pip install numpy pandas scikit-learn ``` --- count: false ## A real ML model and a real `sbatch` script Let's clone the repository of extreme weather events detection and copy a snapshot of the code into `scratch`: ``` bash git clone git@github.com:alexhernandezgarcia/ift-3710-6759-extremeweather.git rsync --bwlimit=10mb -av ift-3710-6759-extremeweather ~/scratch/code-snapshots/ --exclude .git ``` Let's create a complete `sbatch` script, `~/sbatch/extremeweather.sh` (part 3): ``` bash # Prints echo "Currently using:" echo $(which python) echo "in:" echo $(pwd) echo "sbatch file name: $0" python train.py --train data/train.csv --test data/test.csv ``` --- count: false ## A real ML model and a real `sbatch` script We can now submit our `sbatch` job: ``` bash sbatch sbatch/extremeweather.sh ~/scratch/code-snapshots/ift-3710-6759-extremeweather/ ``` And see the Slurm outputs in `~/scratch/logs/` .highlight1[A _very_ typical failure pattern]: "I don't understand why my jobs don't run I have no error and no trace": probably because the parent directories of the log files do not exist - Slurm will not create them if you do not tell it so .cite[(courtesy of Victor Schmidt)]. --- ## The cluster through Jupyter Lab In case all the above was way too complicated... .center[[https://ift6759.calculquebec.cloud](https://ift6759.calculquebec.cloud)] --- name: title class: title, middle ## IFT 3710/6759 ## Projets (avancés) en apprentissage automatique #### .gray224[20 janvier 2023 - Session 4] ### .gray224[Tutoriel clusters HPC] .bigger[.bigger[.highlight1[Questions, doutes, préoccupations, commentaires ?]]] .center[ <a href="http://www.umontreal.ca/"><img src="../../../assets/images/slides/logos/udem-white.png" alt="Mila" style="height: 6em"></a> ] Alex Hernández-García (he/il/él) .footer[[alexhernandezgarcia.github.io](https://alexhernandezgarcia.github.io/) | [alex.hernandez-garcia@mila.quebec](mailto:alex.hernandez-garcia@mila.quebec)]<br> .footer[[@alexhg@scholar.social](https://scholar.social/@alexhg) [](https://scholar.social/@alexhg) | [@alexhdezgcia](https://twitter.com/alexhdezgcia) [](https://twitter.com/alexhdezgcia)]